TL;DR:

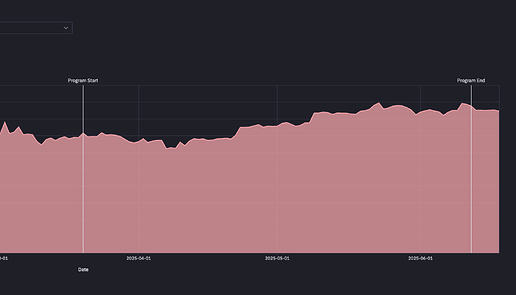

- An observational analysis of S7 Grants Council grants measured OP-normalized ROI using net Superchain TVL inflows between March 20 and June 12, 2025.

- ROI benchmarks emerged at $1.58 (25th percentile), $3.67 (median), and $9.39 (75th percentile) per OP, providing reference points for the evaluation of future grants.

- In total, the net TVL inflows attributable to the TVL-focused grants amounted to $65.3M.

- Observational data suggests that grants pairing strong TVL growth with higher volume per TVL may produce more sustainable net TVL inflows, though conclusions are preliminary.

Context

In S7, the Collective rallied around the North Star metric of Superchain TVL growth, homing in on initiatives most likely to drive outsized impact towards this Intent. Consistent with this effort, it is crucial that all Collective programs, ranging from SuperStacks to Futarchy, are assessed with the same framework, paving the way for a universal, OP-normalized ROI analysis.

Against this backdrop, we conducted an observational impact analysis of the grants the Grants Council made in S7, which concluded on June 12, 2025. The purpose of this post is to outline preliminary learnings to help inform OP allocation decisions in the S8 cycle.

Methodology

The Foundation collaborated with OpenSource Observer to conduct an observational analysis of net TVL inflows between March 20 and June 12, 2025, which represented the defined incentive period for the issued grants. The analysis focused exclusively on grants that targeted Superchain TVL growth, excluding audit grants, which comprised 10.6% (~990K OP) of the total budget.

For the TVL measurement, we relied on the definition outlined in this glossary and used DefiLlama as the primary data source. Furthermore, the TVL measurement was narrowed down to the chains within the Superchain that the grant intended to impact, as per the incentive distribution plan outlined in the grant applications.
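As a rough illustration of the measurement described above, the sketch below sums the change in TVL between the start and end of the incentive period over only the chains a grant targeted. It assumes net TVL inflows can be approximated as end-of-period TVL minus start-of-period TVL; the glossary definition may differ (for example, by adjusting for token price changes). The function name, data layout, and numbers are hypothetical and are not taken from the DefiLlama API or the underlying analysis.

```python
from datetime import date

# Incentive period used in the analysis (dates from the post).
PERIOD_START = date(2025, 3, 20)
PERIOD_END = date(2025, 6, 12)


def net_tvl_inflows(tvl_by_chain, target_chains, start=PERIOD_START, end=PERIOD_END):
    """Sum (TVL at end - TVL at start) over the chains the grant targeted.

    tvl_by_chain: {chain_name: {date: tvl_usd}} (hypothetical layout).
    Assumption: net inflows are approximated by a simple TVL difference.
    """
    total = 0.0
    for chain in target_chains:
        series = tvl_by_chain[chain]
        total += series[end] - series[start]
    return total


# Illustrative numbers only (not real data).
example_tvl = {
    "chain_a": {PERIOD_START: 10_000_000, PERIOD_END: 16_000_000},
    "chain_b": {PERIOD_START: 5_000_000, PERIOD_END: 4_000_000},
    "chain_c": {PERIOD_START: 1_000_000, PERIOD_END: 9_000_000},  # not targeted
}

inflows = net_tvl_inflows(example_tvl, target_chains=["chain_a", "chain_b"])
print(f"Net TVL inflows on targeted chains: ${inflows:,.0f}")
```

Restricting the sum to `target_chains` keeps growth on chains outside the grant's incentive distribution plan from being credited to the grant.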

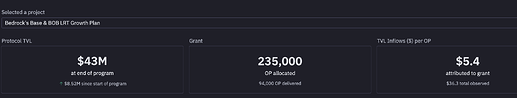

For impact attribution, we tried to account for two factors: how narrowly each grant was scoped, and whether co-incentives targeting the same chains were deployed at the same time. Specifically, we lowered the net TVL inflows attributable to a grant if other co-incentives were known, either because they were explicitly mentioned in the grant application or because they were public knowledge (e.g. inclusion in SuperStacks), or if the grant aimed to incentivize only a small subset of the chain’s total market (e.g. a single stablecoin vault).

For example, Sake Finance had net inflows of $9M during the grant period, but this coincided with Soneium’s ACS campaign (which allocated a total of 70M ASTR to DeFi protocols) as well as SuperStacks (which allocated an additional 2M+ in DeFi incentives across the Superchain). Sake Finance was a participant in all three of these incentive programs. As a result, we applied a discount to the net TVL inflows attributable to the Grants Council program.

While perfect TVL attribution is very hard to achieve, factoring in known confounding variables could help us better approximate reality. We erred on the side of providing more conservative estimates initially. Note that the Foundation applies exactly the same attribution logic to any internal analyses of Foundation-owned programs.
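The attribution logic above can be sketched as a simple discount followed by normalization by the OP granted. This is a minimal sketch, not the method used in the analysis: the post does not state how discounts were sized, so `attribution_share` is a hypothetical parameter. The $9M net inflow echoes the Sake Finance example, while the 25% share and the 500K OP grant size are invented for illustration.

```python
def attributable_inflows(net_inflows_usd, attribution_share):
    """Scale net inflows by the fraction credited to the grant.

    attribution_share is a hypothetical parameter in [0, 1]; a lower value
    reflects known co-incentives or a narrowly scoped grant.
    """
    if not 0.0 <= attribution_share <= 1.0:
        raise ValueError("attribution_share must be between 0 and 1")
    return net_inflows_usd * attribution_share


def roi_per_op(net_inflows_usd, attribution_share, op_granted):
    """OP-normalized ROI: attributable net TVL inflows (USD) per OP granted."""
    if op_granted <= 0:
        raise ValueError("op_granted must be positive")
    return attributable_inflows(net_inflows_usd, attribution_share) / op_granted


# Illustrative inputs: the share and grant size are assumptions, not real values.
example_roi = roi_per_op(
    net_inflows_usd=9_000_000,
    attribution_share=0.25,
    op_granted=500_000,
)
print(f"${example_roi:.2f} in attributable net TVL inflows per OP")
```

Keeping the discount as an explicit input makes it easy to see how sensitive a grant's ROI is to the attribution assumption.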

Key Findings

To standardize TVL measurement, we aggregated results to establish baseline ROI benchmarks that can be used across seasons. At the aggregate level, S7 GC grants achieved net TVL inflows per OP of $9.39 at the 75th percentile, $3.67 at the median, and $1.58 at the 25th percentile, offering reference points for future ROI evaluations.

Note that these benchmarks should not be seen as a be-all and end-all yardstick for future grants; they can instead serve as a reference point for evaluating future performance. For context, the S7 median and 75th percentile fall below the benchmarks from previous growth grants (P75: $37.93; P50: $13.83; P25: $0.73), as determined by an analysis from the data team at OP Labs, while the S7 25th percentile ($1.58) is higher.

In total, the net TVL inflows attributable to the TVL-focused grants amounted to $65.3M. To put this into perspective, preliminary results from SuperStacks—a DeFi incentive campaign run by the Foundation—indicate that the program has brought in $73.4 in net TVL inflows per OP as of last week.

| S7 GC Grants | Net TVL Inflows / OP at Program End (n = 17) |
|---|---|
| 75th percentile | $9.39 |
| 50th percentile (median) | $3.67 |
| 25th percentile | $1.58 |
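Percentile benchmarks like those in the table can be derived from per-grant ROI values along the following lines. This is a sketch under assumptions: the post does not say which quantile interpolation method was used, so the choice of `method="inclusive"` is a guess, and the ROI values below are made up rather than the 17 grants' actual results.

```python
import statistics


def roi_benchmarks(roi_values):
    """Return the 25th, 50th, and 75th percentiles of per-grant ROI.

    Uses the standard library's quartile cut points; the interpolation
    method is an assumption and may differ from the original analysis.
    """
    p25, p50, p75 = statistics.quantiles(roi_values, n=4, method="inclusive")
    return {"p25": p25, "p50": p50, "p75": p75}


# Hypothetical per-grant ROI values in USD of net TVL inflows per OP.
example_rois = [0.5, 1.5, 3.0, 8.0, 44.0]
benchmarks = roi_benchmarks(example_rois)
for label, value in benchmarks.items():
    print(f"{label}: ${value:.2f} per OP")
```

Quartiles are less sensitive to a single outsized grant than a mean would be, which matters when a few grants dominate the distribution.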

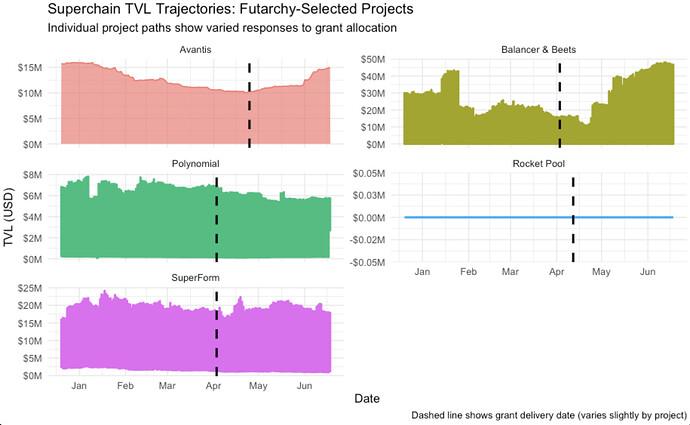

Looking at the project-level ROI distribution, net TVL inflows per OP appear to follow a power-law-like pattern: Morpho’s World grant yielded $44 / OP in attributable net TVL inflows and Euler’s grant achieved $24 / OP, while most other grants hover in the $1-10 / OP range.

Interestingly, top-performing grants like Aerodrome and Uniswap showed not only notable TVL inflows, which indicate supply-side growth, but also high daily volume per TVL ($0.58 - $0.63), a ratio commonly used to gauge demand-side activity. In contrast, projects with lower net TVL inflows / OP showed lower daily volume per TVL ($0.004 - $0.40).

Taken together, this might suggest that healthy demand-side activity combined with significant supply-side traction is indicative of successful DeFi ecosystem momentum. Note that this observation is based on a very small sample size and by no means implies a causal relationship.
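For readers who want to reproduce the demand-side ratio, one plausible way to compute daily volume per TVL is to average the day-by-day ratio of trading volume to TVL. The post does not specify the exact formula, so this averaging choice, the function name, and the sample figures are all assumptions.

```python
from statistics import fmean


def daily_volume_per_tvl(daily_volume_usd, daily_tvl_usd):
    """Average of (volume / TVL) across days; days with zero TVL are skipped.

    Assumption: the metric is a mean of daily ratios. An alternative would
    be total volume divided by average TVL.
    """
    if len(daily_volume_usd) != len(daily_tvl_usd):
        raise ValueError("volume and TVL series must have the same length")
    ratios = [v / t for v, t in zip(daily_volume_usd, daily_tvl_usd) if t > 0]
    if not ratios:
        raise ValueError("no days with positive TVL")
    return fmean(ratios)


# Hypothetical two-day series in USD (not real protocol data).
volumes = [600_000, 630_000]
tvls = [1_000_000, 1_000_000]
ratio = daily_volume_per_tvl(volumes, tvls)
print(f"Daily volume per TVL: ${ratio:.3f}")
```

A higher ratio means each dollar of TVL supports more trading activity per day, which is why it is read as a demand-side signal.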

Conclusion

This preliminary analysis offers a first pass at understanding how effectively S7 Grants Council allocations translated into Superchain TVL growth. While attribution remains inherently complex, the introduction of OP-normalized ROI benchmarks—$1.58, $3.67, and $9.39 at the 25th, 50th, and 75th percentiles—provides a foundational reference point for evaluating future grants.

Early signals suggest that projects demonstrating both strong net TVL inflows and high volume per TVL may contribute more meaningfully to ecosystem growth. These insights, though directional, can help inform more data-driven OP allocation decisions in S8.